Performance Problems & Performance Issues

Don’t know about you, but I’m obsessed with performance. When my project has a performance problem, I take it as a personal offense and do not rest until that problem is found. That’s probably not the healthiest attitude, but we’re not here to talk about that. Let’s talk about performance problems.

This article will show 10 best practices on dealing with performance problems, starting with when you need to deal with them at all. You will see techniques to detect if a problem exists, find the specific cause, and fix it.

There’s enough to say about performance in .NET to fill a book, and in fact several books already exist on the subject [1] [2]. This article is a bird’s-eye view of the matter. I’ll show general techniques and strategies to approach problems, introduce you to the most useful tools, and point you in the right direction.

When to worry about performance

Did you ever hear the quote “Premature optimization is the root of all evil”? That’s a quote by Donald Knuth (kind of), and it’s meant to say: don’t worry about optimization before there’s a problem.

This approach is often the right one to take. Micro-optimization, or worrying about performance during development, can be a waste of time. Most likely the improvement you make won’t be in the critical path, will be negligible, or, worst of all, will increase the complexity and reduce the readability of your code for a minimal advantage.

I very much agree with this approach, with some caveats. Those are:

- While premature optimization is bad, testing for performance is good. Repeatedly testing response times of your requests and measuring execution time of your algorithms is a good idea. If it can be done automatically with tests, even better.

- While premature optimization is bad, disregarding performance altogether is a folly. Use some basic common sense in your algorithms and architecture. For example, if you have frequently used data that never changes, and you keep pulling it from the database, go ahead and cache it in advance. There’s no need to wait until you have scaled to a million customers to make that optimization.

In most applications, performance issues aren’t going to be a problem. There are specific scenarios when you absolutely do need to worry about performance. Those are:

- In a high-load server, serving a lot of requests in high frequency. Something like Google’s search engine for example. Those kinds of servers are where performance matters most. A few optimizations can significantly decrease response time or save tens of thousands of dollars in cloud resources. In those servers, you should proactively test for performance all the time.

- In algorithms. Optimizing the performance of a long-running algorithm can save the user a lot of time, or allow the system to handle a much higher load.

- In a library or a plugin. When you develop a library or a plugin, your code is potentially being used in a high-load server or another type of performance-sensitive program. You are also living in the same process with other libraries and an application. Your code can be called in hot paths or you might use too many resources, hurting the overall performance of the process. It’s best to plan for the worst-case scenario in library and plugin development.

Let’s continue to some best practices.

1. Measure everything

When dealing with performance, unless you measure it, you’re in the dark. There are way too many factors in a .NET program to assume anything without measuring.

This brings the following questions: When to measure, What to measure, and How to measure.

When to measure:

- For scenarios like a high-load server, measure request times as part of your regular tests. If you can integrate measurements into your CI/CD process, even better.

- Measure during development when developing an algorithm or a piece of code that’s going to be used in a hot path.

- Measure when you’re dealing with an existing performance problem. You’ll be able to know when you’ve fixed the issue, and the measurement itself can help you to find the problem.

What to measure:

For development or testing, measuring execution time might be enough. When facing a specific performance problem, you can and should measure more metrics. With various tools, you can measure an abundance of information like CPU usage, % time waiting for GC, exception counters, time spent in a certain method and more. We’ll see how further on.

For a specific problem, you mostly should measure the smallest part that causes the problem. For example, suppose I have an unexplained delay when clicking a button. I will start measuring as closely as possible before the button is hit and stop measuring as soon as the delay is over. This will pinpoint my investigation just to the problematic delay.

How to measure:

Measuring performance is best done as closely as possible to a production environment setting. That means measuring in Release mode and without a debugger attached. Release mode ensures the code is optimized, which can make a big difference in performance for specific scenarios. Similarly, attaching a debugger can also decrease performance.

Having said that, in my experience there’s a lot of insight to be had when measuring in debug mode as well. You just have to keep in mind that some of the findings may be due to the missing code optimizations and the attached debugger.

Optionally, you can measure the part of code after a certain warmup period. That is, run the code several times before starting to measure. This will make sure JIT compiling is not included in the measurement and possibly that the code and/or memory are in CPU cache.

Here are some ways you can measure performance:

- If you’re measuring a specific algorithm, which can be executed as a standalone method, you can use BenchmarkDotNet. It’s an open source project that became the industry standard for performance benchmarks in .NET.

- You can use a performance profiler like dotTrace or ANTS to record a runtime snapshot. Profiling gives you amazing insights into what happened during the recorded time. We’ll get back to profiling further on.

- If you’re measuring execution time in code, use the Stopwatch class. It’s much more accurate than using DateTime timestamps.

- There are profiling tools inside Visual Studio. Specifically, the Diagnostic Tools while debugging and profiling without the debugger. You might need VS Enterprise for some of those features.
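As a rough illustration of the Stopwatch approach combined with a warmup period, here’s a minimal sketch. The SimpleTimer class and its parameter names are made up for this example. For serious benchmarks, a tool like BenchmarkDotNet handles warmup and statistics far better than this.

```csharp
using System;
using System.Diagnostics;

public static class SimpleTimer
{
    // Hypothetical helper: returns the average duration of one call to 'action'.
    public static TimeSpan Measure(Action action, int warmupRuns = 3, int measuredRuns = 10)
    {
        if (action == null) throw new ArgumentNullException(nameof(action));
        if (measuredRuns <= 0) throw new ArgumentOutOfRangeException(nameof(measuredRuns));

        // Warmup: lets the JIT compile the code before we start the clock.
        for (int i = 0; i < warmupRuns; i++)
        {
            action();
        }

        Stopwatch stopwatch = Stopwatch.StartNew();
        for (int i = 0; i < measuredRuns; i++)
        {
            action();
        }
        stopwatch.Stop();

        // Average over all measured runs to smooth out noise.
        return TimeSpan.FromTicks(stopwatch.Elapsed.Ticks / measuredRuns);
    }
}
```

You would call it with something like SimpleTimer.Measure(() => MyAlgorithm()), where MyAlgorithm stands for whatever code you want to time. Remember to run it in Release mode without a debugger attached.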

2. Start using a performance profiler

A performance profiler is your Chef’s Knife when it comes to performance. You can use it to detect performance problems and pinpoint the specific cause.

A performance profiler allows you to record a part of your program runtime, save it as a snapshot, and investigate it. You will be able to see:

- Time spent in each method

- Time in SQL requests

- Time in JIT compile

- Time garbage collecting

- Time for various I/O requests (file and network)

- Time waiting for a lock to release

- Time in WPF/WinForms code (measuring, rendering, …)

- Time in Reflection code

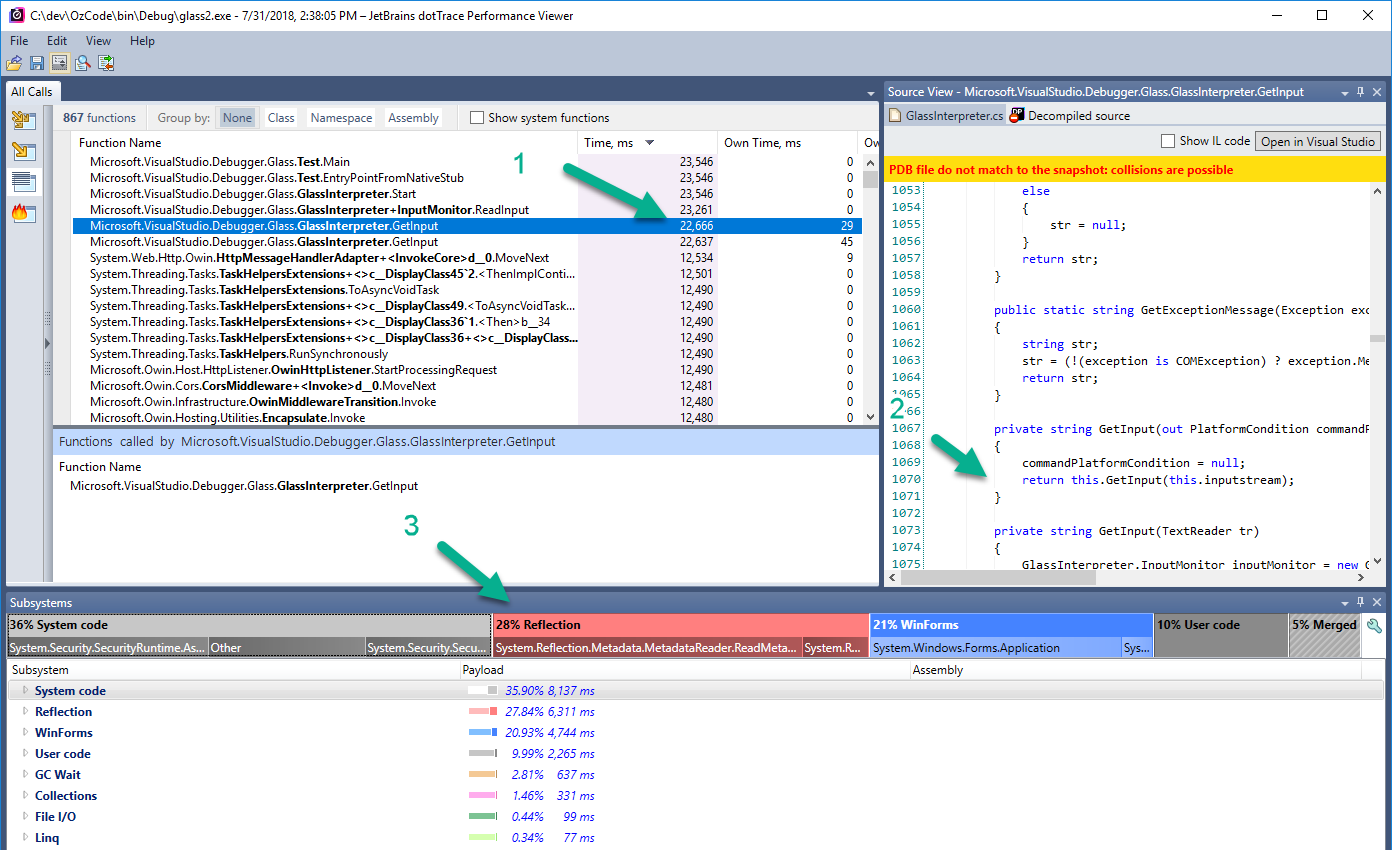

Here’s a screenshot of dotTrace while analyzing a snapshot:

Here’s what we see:

- The method GetInput took 22.6 seconds. Out of it, only 29 milliseconds were its own. The rest were by other methods it called.

- The (decompiled) source code of the method.

- The time (22.6 seconds) was divided as follows:

  - 36% System code

  - 28% Reflection

  - 21% WinForms

  - 10% User code

  - 3% GC Wait (Garbage collection)

  - And others

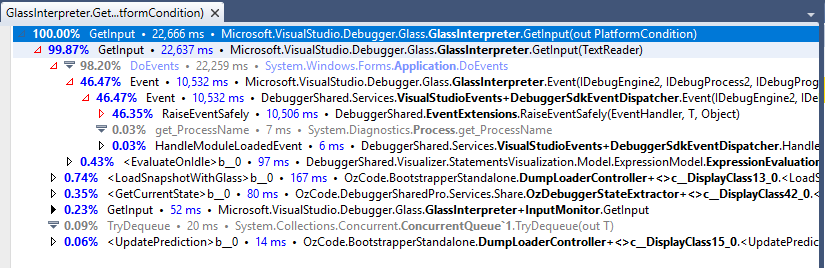

We can further explore the methods GetInput called in another screen:

Here we see that GetInput called another overload of GetInput, which called DoEvents, which called Event and so on. We can see how much time each individual method took in milliseconds and percentage.

In summary, a performance profiler is extremely useful to find and fix performance problems.

3. Mind the GC pressure

Garbage collection is very much tied to performance. I daresay that the most important thing to understand in a .NET process performance-wise is the GC.

While allocations are very cheap, GC is expensive and we should constantly keep an eye on it. Specifically, we should be looking at 2 metrics: % time spent in GC and Gen 2 collection frequency. Here’s why:

- % time spent in GC is the share of time your process spends in garbage collection. If we keep this to a minimum, our process will spend more time executing code. I’ve heard different numbers, but generally speaking, a healthy process should spend no more than 10%-20% of its time in GC.

- Gen 2 collection frequency should be kept to a minimum. A gen 2 collection is also known as a full garbage collection. It’s very expensive because it reclaims objects in all generations. If you have a lot of gen 2 collections, your process will be very slow and can noticeably hang at runtime.

We have 3 prime directives in dealing with the GC:

- Keep allocations to a minimum.

- Try to have allocated objects garbage collected as soon as possible. The GC is optimized for short-lived objects.

- Have as few Gen 2 collections as possible. And Gen 1 collections for that matter.

There are many techniques and best practices to achieve that. In fact, I recently wrote the article 8 Techniques to Avoid GC Pressure and Improve Performance in C# .NET which will help you do just that.

Besides the techniques and optimizations I describe in the linked article, you should always be measuring GC behavior. Here are some ways you can measure GC behavior:

- While debugging, you can use the Diagnostic Tools window in Visual Studio to visualize garbage collections.

- A performance profiler like dotTrace will show you % time spent in GC.

- Windows Performance Counters contain useful counters for memory. These counters include both % Time in GC and Gen 2 Collections. Tools like PerfMon can visualize this, or you can get counter values in code. Here’s a great article explaining the meaning of GC performance counters.
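If you just want a quick look at GC activity from code, without performance counters, one option is the built-in GC.CollectionCount method. Here’s a minimal sketch. The GcProbe class is a name I made up for this example, and it only counts collections. It doesn’t tell you the % time spent in GC.

```csharp
using System;

public static class GcProbe
{
    // Hypothetical helper: reports how many collections of each generation
    // happened in this process while 'action' was running.
    public static (int Gen0, int Gen1, int Gen2) CountCollections(Action action)
    {
        if (action == null) throw new ArgumentNullException(nameof(action));

        int gen0Before = GC.CollectionCount(0);
        int gen1Before = GC.CollectionCount(1);
        int gen2Before = GC.CollectionCount(2);

        action();

        return (
            GC.CollectionCount(0) - gen0Before,
            GC.CollectionCount(1) - gen1Before,
            GC.CollectionCount(2) - gen2Before);
    }
}
```

The counts are process-wide, so collections triggered by other threads during the measurement are included as well. A high Gen 2 number for a short operation is a hint that it’s worth a closer look with a profiler.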

4. Mind the JITter

.NET code is compiled to MSIL code instead of native code. This is the same trick used by Java JVM that allows Java to run everywhere. This magic happens with just-in-time compilation (JIT).

The JIT compiler converts MSIL code into native code the first time a method is called. This is pretty fast, but can still cause performance issues, especially at startup. Usually, the JIT is not going to be a problem and you won’t need to optimize anything. But, in some cases, you might want to tweak the JITter.

There are 3 modes to consider for JIT:

- Normal JIT compilation – Works just as described. It’s usually the best mode, especially for long-running applications. For servers, and ASP.NET specifically, this is the preferred option.

- Native images with Ngen.exe – Instead of just-in-time compilation, you can do ahead-of-time (AOT) compilation with a tool called Ngen.exe. The compilation is machine-specific, so it should be run after installation on the deployed machine. This usually means you will integrate Ngen.exe with your installer. When run, Ngen.exe compiles the MSIL code into the Native Image Cache. This means that your process uses the image cache and no longer needs to do JIT. The result is a faster startup and smaller assembly size. Ngen is best used for desktop applications where startup time is more important. Don’t use this for an ASP.NET server.

- ReadyToRun images – .NET Core 3 brings another type of AOT compilation: the ReadyToRun (R2R) format. This has already been implemented in the Mono runtime for a while. Unlike Ngen, R2R is not machine-specific (but it is OS-specific). That means it can be integrated with your regular build. It reduces startup time but increases assembly size because it contains both IL code and native code. .NET Core 3 is still in preview, so we’ll see how popular this optimization will be in the near future. This method should be used on client-installed applications (Desktop & Mobile).

5. Get to know (about) notable .NET performance tools (Performance counters, ETW events)

When talking about performance in .NET, there are some tools that have to be mentioned.

-

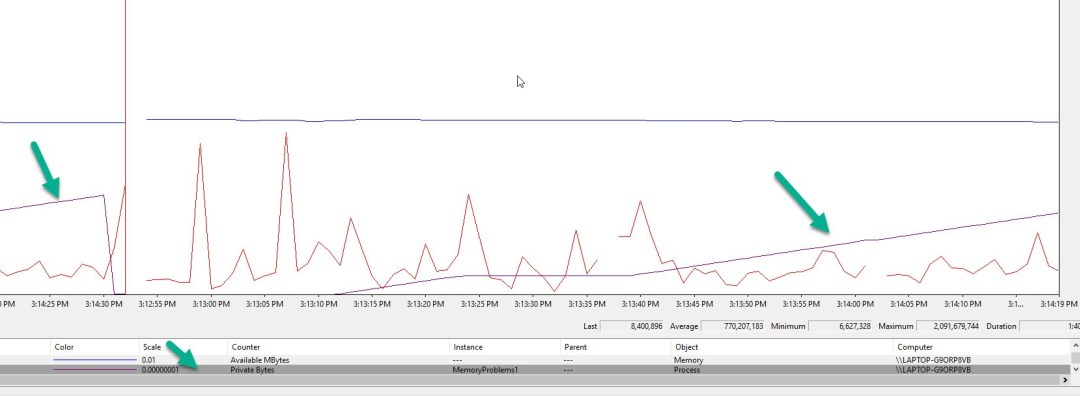

Performance Counters – Windows supports a feature called performance counters, which allows you to follow a whole lot of very useful metrics innately . You can use the PerfMon tool (included in Windows) to see them interactively. For example, here is me viewing some memory consumption counters:

You can follow exception counters, JIT counters, lock counters, networking counters, and garbage collections counters. Some notable counters are Process | % Processor Time which indicates CPU usage, .NET CLR Memory | % time in GC and .NET CLR Memory | Gen 2 collections which indicate GC pressure. Full list here and explanation on GC counters here.

- ETW Logs – Windows has a built-in logging system called Event Tracing for Windows (ETW). It’s an extremely fast kernel-level logging system that .NET and Windows use internally. What does it mean for us? Well, 2 things.

For one, you can consume (listen to) logs from your process to analyze the performance. The PerfView tool is perfect for that. Those logs contain data on events like GC allocations and deallocations, JIT operations, kernel events, etc. Pretty much everything Windows and .NET do is logged with ETW.

The second usage is using ETW yourself for logging. As mentioned, it’s extremely fast, so you’ll even be able to add logs to performance-sensitive hot paths.

Here’s a nice tutorial by Simon Timms on the matter.
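To give a feel for the second usage, here’s a minimal sketch of a custom event source built on the EventSource class from System.Diagnostics.Tracing. The class name, the source name "Demo-PerformanceArticle", and the two events are all made up for this example.

```csharp
using System.Diagnostics.Tracing;

// Hypothetical event source; the name is what a listening tool filters on.
[EventSource(Name = "Demo-PerformanceArticle")]
public sealed class DemoEventSource : EventSource
{
    // A single shared instance is the usual way to use an EventSource.
    public static readonly DemoEventSource Log = new DemoEventSource();

    private DemoEventSource() { }

    [Event(1, Level = EventLevel.Informational)]
    public void RequestStarted(string url)
    {
        // Skip the write entirely when nobody is listening.
        if (IsEnabled()) WriteEvent(1, url);
    }

    [Event(2, Level = EventLevel.Informational)]
    public void RequestCompleted(string url, long elapsedMilliseconds)
    {
        if (IsEnabled()) WriteEvent(2, url, elapsedMilliseconds);
    }
}
```

In your code you would call DemoEventSource.Log.RequestStarted("/products") and so on. When no listener is attached the calls cost very little, which is what makes this usable in hot paths.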

For .NET Core, on non-Windows environments, use dotnet-counters as an alternative to PerfMon for performance counters. Similarly, dotnet-trace is an alternative for ETW logs. I didn’t try those tools, so please report in the comments on your experience if you did.

I would say that in many cases, a performance profiler will give you similar information in a more consumable manner. However, there are instances when using the above tools is better. Here are some reasons why you might prefer performance counters and ETW over a performance profiler:

- Counters and ETW are non-intrusive. They don’t disrupt the process like a profiler.

- Performance counters are relatively easy to use.

- Sometimes you just can’t profile a specific machine for whatever reason.

- Those tools are free, whereas performance profiler licenses are costly.

6. Save execution time with Caching, but carefully

One important concept to improve performance is Caching. The basic idea is to reuse operation results. When performing some operation, we will save the result in our cache container. The next time that we need that result, we will pull it from the cache container, instead of performing the heavy operation again.

For example, you might need to pull product recommendations from the database. When doing so, you can save it in your in-memory cache container. Next time someone wants to view that same product, you can pull it from memory, saving a trip to the database.

Sounds like a great solution at first glance, but nothing is so easy in software engineering. Consider these possible complications:

- If another product recommendation is added or deleted, you need to know about it and refresh the cache. This adds complexity.

- If you keep cached items forever, you’ll eventually run out of memory. Eviction policies need to be set, adding complexity.

- In-memory caching takes up memory. It’s the worst kind of memory because it’s long-lived. This means it will be promoted to higher GC generations until finally garbage collected, encouraging more Gen 2 collections, which considerably slow down a process.

I mentioned in-memory caching, but you can also use persistent cache (file/database) or distributed cache with a service like Redis. Those will be slower but can solve a lot of the mentioned problems.
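To make the in-memory variant concrete, here’s a minimal sketch of a cache with a simple time-based expiration. ExpiringCache and its members are names I made up for this example. It only replaces stale entries when they are requested again and never removes untouched ones, so it’s not a full eviction policy.

```csharp
using System;
using System.Collections.Concurrent;

// Hypothetical cache: entries are considered stale after a fixed time-to-live.
public sealed class ExpiringCache<TKey, TValue> where TKey : notnull
{
    private readonly ConcurrentDictionary<TKey, (TValue Value, DateTime ExpiresAtUtc)> _items =
        new ConcurrentDictionary<TKey, (TValue Value, DateTime ExpiresAtUtc)>();
    private readonly TimeSpan _timeToLive;

    public ExpiringCache(TimeSpan timeToLive)
    {
        _timeToLive = timeToLive;
    }

    // Returns the cached value, or runs 'factory' (the heavy operation) on a miss.
    public TValue GetOrCreate(TKey key, Func<TKey, TValue> factory)
    {
        DateTime now = DateTime.UtcNow;
        if (_items.TryGetValue(key, out var entry) && entry.ExpiresAtUtc > now)
        {
            return entry.Value;
        }

        // Note: two threads missing at the same time may both run the factory.
        TValue value = factory(key);
        _items[key] = (value, now + _timeToLive);
        return value;
    }

    // Call this when the underlying data changes, e.g. a recommendation is added.
    public bool Invalidate(TKey key)
    {
        return _items.TryRemove(key, out _);
    }
}
```

With this in place, something like cache.GetOrCreate(productId, LoadRecommendationsFromDb) goes to the database only on a miss, where LoadRecommendationsFromDb stands for your own loading method.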

To summarize, caching is a very powerful tool that can considerably improve performance. It also comes with a price, which you should carefully consider when implementing. For more information about caching, you can read my article Cache Implementations in C# .NET

7. Optimize UI Responsiveness

When a user interacts with UI, any response under 0.1-0.2 seconds feels instantaneous [1] [2]. 1 second is the limit for the user’s flow of thought to stay uninterrupted. Anything more than 1 second, the user feels they are no longer in control of the interaction.

Here are some rules of thumb in regards to UI performance:

- 0.1-0.2 seconds is a lot of time for a computer. If you’re responding within that time frame, there’s no need for further optimization.

- Whatever you do, don’t freeze the UI thread in client applications. Nothing feels more buggy than an unresponsive window. The async/await pattern, together with something like Task.Run, is perfect for sending work to another thread and then coming back to the UI thread when finished.

- When an operation takes a while, give some immediate feedback that the operation started. It’s an infinitely better experience than having the user wait a few seconds after a button click with no feedback at all. A progress bar is great. It shows the system is working, and possibly when it’s going to be finished.

- For operations that don’t require an immediate result, consider using a Job Queue. Let the user know that their job has started and let them continue interacting with your system. It’s a perfect solution to level out peak times. Job Queues have many benefits for performance. For more information, check out my article series called Job Queue Implementations in C#.
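Here’s a minimal sketch of keeping heavy work off the UI thread with async/await and Task.Run. The BackgroundWork class and its methods are made up for this example, and SumTo just stands in for any slow operation. In a real WPF or WinForms app, the awaiting method would be your event handler.

```csharp
using System.Threading.Tasks;

public static class BackgroundWork
{
    // Stand-in for a slow, CPU-bound operation.
    public static long SumTo(int n)
    {
        long sum = 0;
        for (int i = 1; i <= n; i++)
        {
            sum += i;
        }
        return sum;
    }

    // Hypothetical click handler logic: the heavy part runs on a thread-pool
    // thread, so the calling (UI) thread is free while it's in progress.
    public static async Task<string> OnButtonClickAsync(int n)
    {
        long result = await Task.Run(() => SumTo(n));

        // In a UI application, execution resumes on the UI thread here,
        // so it's safe to update controls with the result.
        return "Sum: " + result;
    }
}
```

In a console application there’s no UI thread to return to, so the code after the await simply continues on a thread-pool thread.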

8. Optimize only the critical path

When solving a specific problem, it’s important to know where to put in the work. Otherwise, you can spend a lot of effort fixing something that has no effect.

The critical path is an important concept in performance. It’s the longest sequence of activities in a task that must be completed for the task to be ready. It’s a bit confusing, so I’ll give an example.

Suppose you work on a web application that does image filters. When the user clicks Filter you do 3 operations simultaneously:

- Add telemetry data on the user’s action (10 milliseconds)

- Apply the filter (25 seconds)

- Backup the initial image to history (10 seconds)

Suppose that you can easily optimize the backup operation to take 5 seconds instead of 10. Should you do it? Well, there’s no point. Since the operations are done simultaneously, the operation will still take 25 seconds. The only operation that’s worth optimizing is the critical path, which is applying the filter.
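The arithmetic behind this example can be sketched in a few lines. The CriticalPath class is a name I made up for illustration, and it assumes the operations really do run fully in parallel without competing for resources.

```csharp
using System;
using System.Linq;

public static class CriticalPath
{
    // Operations that run simultaneously are done when the slowest one is done.
    public static double TotalSeconds(params double[] parallelDurations)
    {
        if (parallelDurations == null || parallelDurations.Length == 0)
        {
            throw new ArgumentException("At least one duration is required.", nameof(parallelDurations));
        }
        return parallelDurations.Max();
    }
}
```

With the numbers above, TotalSeconds(0.010, 25, 10) gives 25, and after optimizing the backup, TotalSeconds(0.010, 25, 5) still gives 25. Only improving the filter changes the result: TotalSeconds(0.010, 12, 10) gives 12.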

9. Micro-optimize Hot Paths

Hot paths are code sections in which most of the execution time is spent. They often execute very frequently.

While micro-optimizations are usually unnecessary, hot paths are the one place where they are very helpful. There are lots and lots of optimizations you can do. Here are some of them:

- Avoid LINQ in favor of plain loops over regular arrays.

- Prefer for loops over foreach loops for non-array direct-access collections like List<T>.

- Allocate as little memory as possible. You can make use of structs, stackalloc, and Span to avoid heap allocations. You can use shared memory like System.Buffers.ArrayPool to reuse memory.

- Use StringBuilder and array concatenations correctly.

- Use Buffer.BlockCopy if you have to copy arrays.
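To illustrate the allocation-related tips, here’s a minimal sketch using stackalloc with Span and a rented buffer from System.Buffers.ArrayPool. The HotPathSamples class and both methods are toy examples I made up. What matters is the pattern, not what they compute.

```csharp
using System;
using System.Buffers;

public static class HotPathSamples
{
    // Uses a stack-allocated buffer, so no heap allocation takes place.
    public static int SumOfDigits(int value)
    {
        Span<int> digits = stackalloc int[10]; // an int has at most 10 digits
        int count = 0;

        uint remaining = (uint)Math.Abs((long)value);
        while (remaining > 0)
        {
            digits[count++] = (int)(remaining % 10);
            remaining /= 10;
        }

        int sum = 0;
        for (int i = 0; i < count; i++)
        {
            sum += digits[i];
        }
        return sum;
    }

    // Rents a temporary array from the shared pool instead of allocating one.
    public static long SumWithPooledBuffer(int count)
    {
        int[] buffer = ArrayPool<int>.Shared.Rent(count);
        try
        {
            // The rented array may be larger than requested; use only 'count' items.
            for (int i = 0; i < count; i++)
            {
                buffer[i] = i;
            }

            long sum = 0;
            for (int i = 0; i < count; i++)
            {
                sum += buffer[i];
            }
            return sum;
        }
        finally
        {
            // Always give the array back, even if an exception was thrown.
            ArrayPool<int>.Shared.Return(buffer);
        }
    }
}
```

As always with micro-optimizations, measure before and after. For small or rarely executed code, the simpler version with a regular array is usually fine.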

10. Beware of common performance offenders

There are several patterns in .NET that you should avoid as much as possible. The following are proven performance offenders:

- Finalizers are bad for performance. Any class with a finalizer can’t be garbage collected in Gen 0, which is the fastest generation. It will be promoted at least one generation. The rule of thumb is to have objects de-allocated as fast as possible or not at all. As mentioned, the GC is optimized for short-lived objects. When you must use finalizers with something like the Dispose Pattern, make sure to call Dispose and suppress the finalizer.

- Throwing Exceptions as normal behavior is bad practice. While adding try/catch is cheap, actually throwing an exception is expensive. For example, checking if something can be parsed by calling int.Parse() and catching an exception should be avoided. Instead, you can use int.TryParse(), which returns false if parsing is impossible.

- Reflection in .NET is very powerful, but expensive. Try avoiding reflection as much as possible. Operations like Activator.CreateInstance() and myType.GetMethod("Foo").Invoke() will hurt performance. That’s not to say you should not use it, just keep in mind not to use it in performance-sensitive scenarios and hot paths.

- The dynamic type is particularly expensive. Try avoiding it entirely in hot paths.

- Multi-threaded synchronization is really hard to get right. Whenever you have locks and threads waiting for each other, you have a potential performance problem on your hands. Not to mention possible deadlocks. If possible, try avoiding locks altogether.

- As mentioned already, memory and performance in .NET are tied to each other. Bad memory management will affect performance. Specifically, try to avoid Memory Leaks and GC Pressure.
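Two of the items above are easy to show in code: parsing without exceptions, and suppressing the finalizer in the Dispose Pattern. This is a minimal sketch. OffenderSamples and ResourceHolder are names I made up for this example, and the actual resource cleanup is left as a comment.

```csharp
using System;

public static class OffenderSamples
{
    // No exception is thrown for bad input; TryParse just returns false.
    public static int ParseOrDefault(string text, int fallback)
    {
        return int.TryParse(text, out int value) ? value : fallback;
    }
}

// Hypothetical class that owns some unmanaged resource.
public class ResourceHolder : IDisposable
{
    private bool _disposed;

    public bool IsDisposed => _disposed;

    public void Dispose()
    {
        Dispose(true);
        // The object is already cleaned up, so the GC doesn't need to run
        // the finalizer and the object isn't promoted because of it.
        GC.SuppressFinalize(this);
    }

    protected virtual void Dispose(bool disposing)
    {
        if (_disposed) return;

        // Release unmanaged resources here. When 'disposing' is true,
        // dispose managed resources as well.
        _disposed = true;
    }

    // Safety net for callers that forget to call Dispose.
    ~ResourceHolder()
    {
        Dispose(false);
    }
}
```

Wrapping the object in a using statement makes sure Dispose is called, which in turn means the finalizer never has to run.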

Summary

A big part of almost any application is the Database. DB optimizations are obviously important, but they aren’t directly related to .NET. It’s a huge subject on its own, which I’ll leave for another post.

.NET is deceptively simple, which gives the impression you can do anything without repercussions. While that’s true to some extent, you can easily get into trouble when you don’t have in-depth knowledge. Moreover, it can go unnoticed until you’ve scaled to a million users and it’s too late.

The important things that can get you into trouble when your application has grown are those that are hardest to fix. It might be fundamentally bad architecture, incorrect memory management, or badly planned database interaction. Just keep in mind that your application might grow huge and become wildly successful with a billion users. Cheers.